Whether you’re building a product from scratch or working on a beloved app, every design decision you make carries risk. That’s why you need UX testing methods to inform your decisions. The bigger the design proposal, the bigger the risk. Ideally, you and your team are right about the problem space as defined in the creative brief and about your solution. But as we know, teams rarely nail anything on the first try. Great UX design involves a lot of learning and experimentation.

With every potential gain or improvement comes the possibility that you’re wrong. Best case, your design makes or exceeds its projected impact. Worst case, your team wastes valuable time and resources or loses customers and money. Luckily, you don’t have to just release software and hope for the best. We have many tools at our disposal to test that we’re making sound design investments.

This post breaks down 10 UX testing methods you can use to increase confidence that you’re investing in the right direction. Note that these are oversimplified here for reference and it’s best to read the resources to gain a more thorough understanding of these practices.

Discover how to test UI & UX design and forget about second-guessing yourself!

Testing Plans

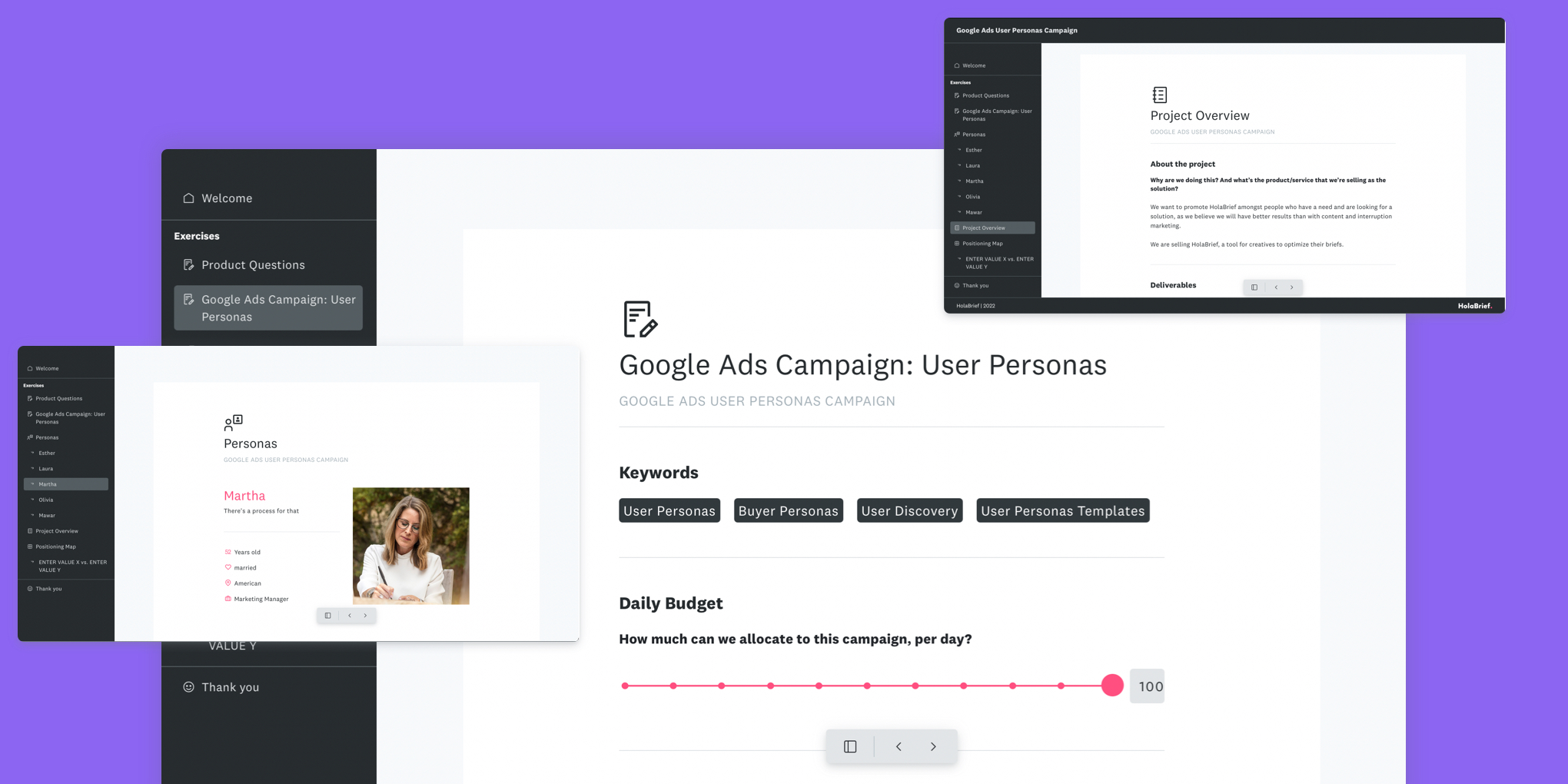

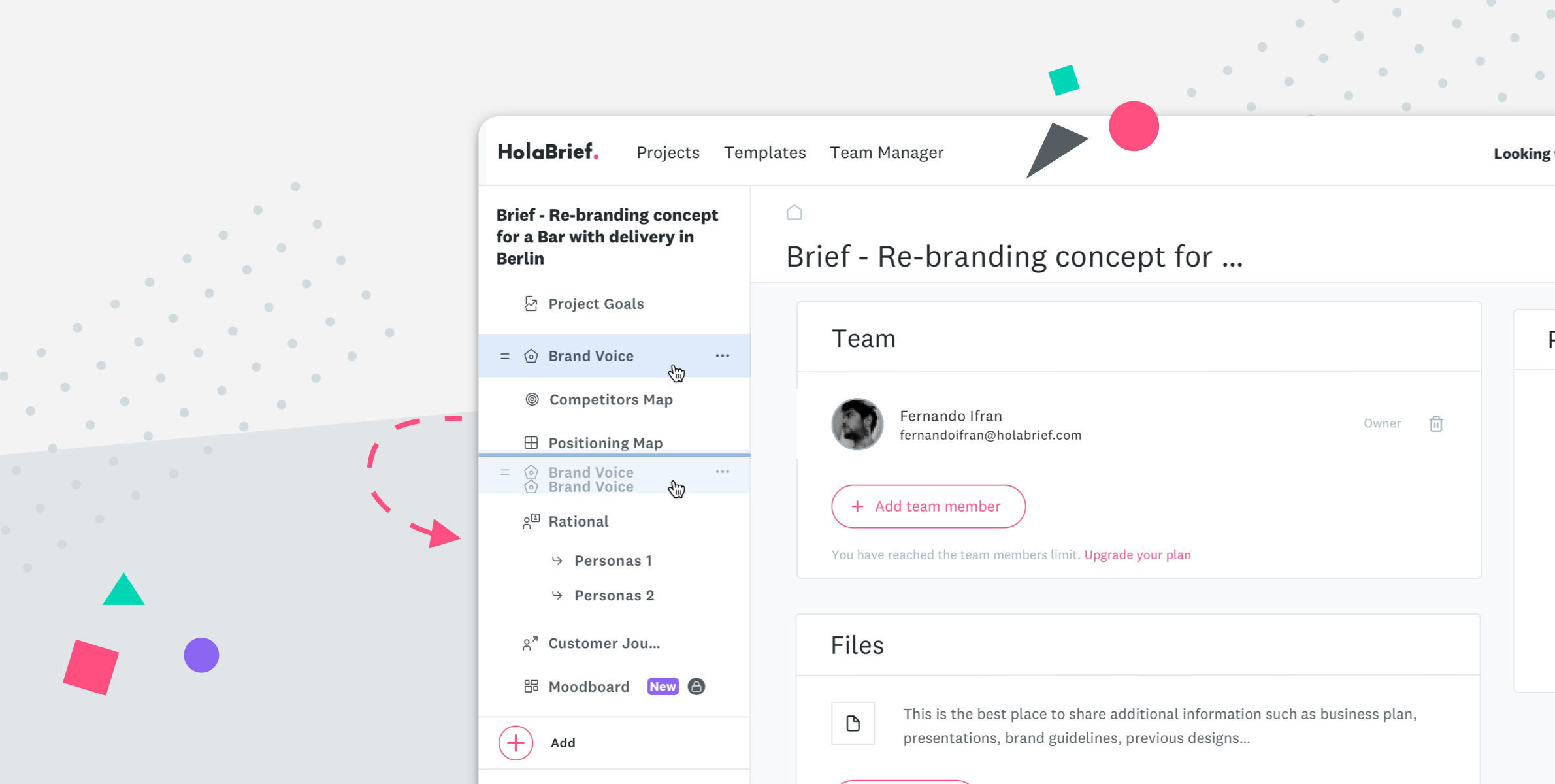

As you define how you’ll test a design idea, start a testing plan where you capture all the key aspects of your test.

Testing plans are like creative briefs that capture the design of a test, all the thinking that went into it and everything that you learned.

The creative brief set up before the design process holds valuable information on the problem setting of the project. Keep this in mind, as it should be your guide throughout the whole design process to refer back to whenever a design decision is made. So whenever you are testing a design decision, find out if the outcome of the test aligns with the problem setting of the creative brief.

Every UX testing plan should include a testable hypothesis, the testing method you’ll use to test it, and what you’ll measure to determine a winner. It’s also helpful to include images of the customer experience or UI design changes along with a detailed description of how success is instrumented and measured in the test. After the test is complete, you can add the results, what your team learned, and how you’ll move forward. There’s no need to obsess over this documentation, but I personally find that the process of writing all of these aspects out facilitates important team discussions and reduces errors in the tests.

Download the Validation Plan Template.

Method 1 - Problem Interviews

Ash Maurya describes this foundational technique in his book Running Lean. This testing method only works if you’re solving a problem. It’s not as useful for products or features that are minor optimizations or nice-to-haves.

Good for:

- Ensuring people have the problem you’re solving

- Ensuring you know who those people are

- Validating your marketing channels

How to do it:

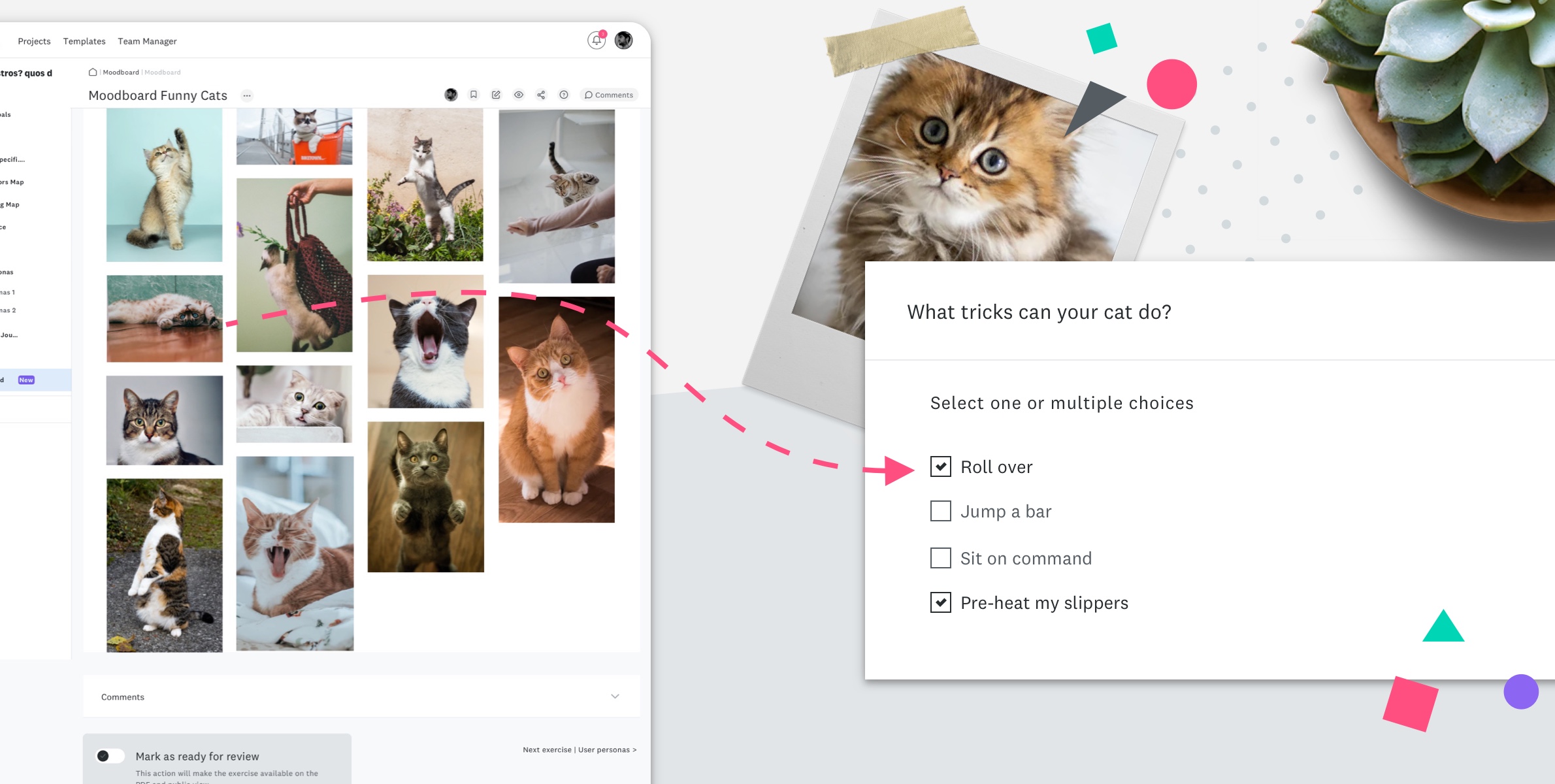

- Create proto-personas of the people you are solving for with the help of a user persona template.

- Write a discussion guide focused on the problem you’re solving for them (not on your product).

- Optionally, include two scales on your discussion guide - one for how much your interviewee aligns with your proto-persona and one for how much your interviewee experiences the problem

- Source people in line with your proto-persona. This is how you validate your marketing channels: if you can’t find people to interview, how will you market this product or feature to them?

- Interview them

- Synthesize the interviews. Look at how your interviewees scored on your two scales. Using the anecdotes you heard, assess how much evidence you have that your target customer experiences the problem you’re solving.
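
To make the synthesis step concrete, here is a minimal Python sketch of tallying the two optional scales. The 1-5 scales, the threshold of 4, and the names `Interview` and `summarize` are assumptions made for illustration; they are not part of the technique as described in Running Lean.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interview:
    name: str
    persona_fit: int       # assumed 1-5 scale: how closely they match the proto-persona
    problem_severity: int  # assumed 1-5 scale: how strongly they experience the problem


def summarize(interviews, threshold=4):
    """Average both scales and count who scores at or above the threshold on both."""
    strong = [
        i for i in interviews
        if i.persona_fit >= threshold and i.problem_severity >= threshold
    ]
    return {
        "avg_persona_fit": mean(i.persona_fit for i in interviews),
        "avg_problem_severity": mean(i.problem_severity for i in interviews),
        "strong_signal_share": len(strong) / len(interviews),
    }


# Hypothetical scores from four interviews
interviews = [
    Interview("A", 5, 4),
    Interview("B", 4, 2),
    Interview("C", 2, 5),
    Interview("D", 5, 5),
]
summary = summarize(interviews)
```

The numbers only organize what you heard. The anecdotes from the interviews are still the main evidence.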

What it validates:

- Your target customer (which may be an existing market or a new one)

- The problem you’re solving

- Your marketing channels

Resources:

- Running Lean book by Ash Maurya

Method 2 - Value Surveys

There is much debate about whether surveys are a reliable UX/UI design testing method, but they are an option to consider, especially when you’re short on time. Anthony Ulwick’s outcome-oriented survey technique allows you to develop more confidence in the value you’re creating.

Good for:

- Gaining more confidence about what your customers value

How to do it:

- Conduct problem interviews

- Articulate what your customers want to achieve (aka outcomes)

- Create a survey that asks your customers to rank the outcomes by importance (how much they matter to them)

- Analyze survey results and prioritize outcomes by the importance
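
As a rough illustration of the analysis step, the Python sketch below averages importance ratings per outcome and sorts them. It assumes each respondent rates every outcome from 1 to 5; the outcome names, the data, and the `prioritize` helper are hypothetical, and this is a simplification rather than Ulwick’s full scoring approach.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: each respondent rates every outcome
# from 1 (not important) to 5 (very important).
responses = [
    {"Find a past order quickly": 5, "Share a cart with family": 2, "Reorder in one tap": 4},
    {"Find a past order quickly": 4, "Share a cart with family": 3, "Reorder in one tap": 5},
    {"Find a past order quickly": 5, "Share a cart with family": 1, "Reorder in one tap": 3},
]


def prioritize(responses):
    """Return (outcome, average importance) pairs, most important first."""
    ratings = defaultdict(list)
    for response in responses:
        for outcome, rating in response.items():
            ratings[outcome].append(rating)
    averages = {outcome: mean(values) for outcome, values in ratings.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


ranked = prioritize(responses)
for outcome, importance in ranked:
    print(f"{importance:.2f}  {outcome}")
```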

What it validates:

- The problem you’re solving

- How valuable solving that problem is to your customers

- What existing alternatives people are using now and how much they solve the problem

Resources:

- Anthony Ulwick’s HBR article “Turn Customer Input into Innovation”

- Strategyn’s page on Concept Testing

Method 3 - Landing Page/Announcement Tests

Good for:

- Stronger validation of your marketing channels

- Building an interest or pre-order list

How to do it:

- Create a marketing plan around how and where you plan to reach your customers

- Define how much to share about your product and what kind of interest you want to see (sign-ups, emails, pre-orders, etc)

- Design your messaging and capture mechanism (a landing page, an email campaign)

- Launch it and start marketing

What it validates:

- Your marketing channels

- Demonstrated interest in your value proposition

- UI design process

Resources:

- “How Dropbox Started As A Minimal Viable Product” by Eric Ries

- “How to successfully validate your idea with a Landing Page MVP” by Buffer CEO Joel Gascoigne

- “From Minimum Viable Product to Landing Pages” by Ash Maurya

Method 4 - Feature Fakes

If you have an existing product, you can start building a feature without building the entire thing. You can use feature fakes to see if customers will even try to access what you’re designing. If they won’t tap or click on something, it may not be worth building the actual feature.

Good for:

- Testing discoverability of a feature

- Gauging interest in a feature

How to do it:

- Define your feature thoughtfully

- Design the indicator that allows people to access your feature (for example, the button or toggle or message)

- Build the indicator

- When people hit the indicator, show them a message that explains why the feature isn’t functioning. You could let them know it’s not available yet and save emails for notification later or you could show an error message and give them a way out.

What it validates:

- That your feature is discoverable

- That people are interested in trying it

Resources:

- “Fast, Frugal Learning with a Feature Fake” by Joshua Kerievsky

- “Feature Fake” by Kevin Ryan

- UX for Lean Startups book by Laura Klein

Method 5 - Usability Testing Methods

Usability testing methods are often mentioned but (seemingly) sparingly practiced. They are great for making sure people understand how to use something you’ve created, but they won’t tell you if people like your product, if they’ll use it, or if they’ll pay for it. That’s what the other techniques on this list are for.

Good for:

- Ensuring people can use the thing you’ve created

How to do it:

- Write a list of critical tasks you want people to be able to do

- Create a test plan

- Create a prototype

- Ask people to complete the tasks without guidance

- Observe their ability to do so

- Score the tasks by each person’s ability to understand and complete them without guidance
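
One possible way to score the tasks is sketched below in Python. The 0-2 scale (0 = could not complete, 1 = completed with difficulty, 2 = completed easily), the task names, and the `score_tasks` helper are assumptions for illustration, not a standard scoring system.

```python
# Hypothetical scores: one entry per participant for each critical task.
# Assumed scale: 0 = could not complete, 1 = completed with difficulty, 2 = completed easily.
task_scores = {
    "Create an account": [2, 2, 1, 2, 2],
    "Add a payment method": [1, 0, 1, 2, 0],
    "Invite a teammate": [2, 1, 2, 2, 1],
}


def score_tasks(task_scores, max_score=2):
    """Compute a completion rate and a normalized ease score (0 to 1) per task."""
    report = {}
    for task, scores in task_scores.items():
        completed = sum(1 for s in scores if s > 0)
        report[task] = {
            "completion_rate": completed / len(scores),
            "ease": sum(scores) / (max_score * len(scores)),
        }
    return report


report = score_tasks(task_scores)
# The task with the lowest ease score is the first candidate for redesign.
worst_task = min(report, key=lambda task: report[task]["ease"])
```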

What it validates:

- Whether or not people can use your product (Note: it won’t tell you if people will use your product, just that they can)

Resources:

- "Four Steps To Great Usability Testing (Without Breaking The Bank)" by Patrick Neeman

- “A Comprehensive Guide To User Testing” by Christopher Murphy

Method 6 - Feedback Follow-ups

Existing products and services receive customer feedback through various channels. Smart product teams are spending a lot of time close to their customers, understanding what’s working and what’s not. When you identify opportunities using their feedback, you can go back to those same customers to get their thoughts on your solutions or improvements.

Good for:

- Building bi-directional channels for customer feedback

- Deepening your understanding of what your customers want/need

- Strengthening your UX/UI design solutions

How to do it:

- Identify a high impact pattern in customer feedback

- Reach out to the customers who provided that feedback and interview them to learn more

- Define the opportunity and how you’ll address it

- Once you have a design proposal, write a discussion guide to understand if you’ve addressed the customer concerns and if they can comprehend your solution.

- Reach back out to the customers on your list and interview them

- If necessary, improve your solution

What it validates:

- That you’ve solved the problem you identified through customer feedback

Resources:

- “Customer Feedback Strategy: The Only Guide You'll Ever Need” from HubSpot

- “11 Founders On How To Best Listen To Customer Feedback”

- “Basecamp: How To Build Product People Love (And Love Paying For)”

Method 7 - AB and Multivariate Tests

AB testing is one of the most commonly used methods for teams with existing products in the market. Multivariate tests (testing more than one variable in different combinations) can also be an efficient way to learn. Both techniques are easily overused and abused. Often, you can use another technique to learn before reaching for this method.

Good for:

- Helping a team decide between two similar directions

- Derisking small optimizations to your product

How to do it:

- Define a change or set of changes that test your hypothesis. Make sure they drive behavior directly to the metric you want to move (e.g. if you change the sign-up screen, measure behavior on the sign-up screen).

- Choose your target audience and traffic sizes (e.g. mobile-only, customers who x, 5% of all traffic)

- Design and build those changes using a flag or other method for conditionally showing variations to users

- Instrument your key metric (the main metric you want to shift) and any contributing metrics you want to monitor so they appear in all of your AB testing and analytics tools.

- QA your changes and your instrumentation

- Use an AB testing platform to split traffic evenly among your variations

- Collect and analyze results
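
An AB testing platform normally handles both the traffic split and the statistics for you. To show what is happening underneath, here is a minimal Python sketch using only the standard library. It assumes a hash-based split and a two-proportion z-test on conversion counts; the function names, the experiment name, and the numbers are hypothetical and do not reflect any specific platform’s API.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str, variants=("control", "treatment")) -> str:
    """Deterministically bucket a user so they always see the same variation."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value


# Hypothetical results: 200 of 2,000 control users converted vs. 250 of 2,000 treatment users.
z, p_value = two_proportion_z_test(200, 2000, 250, 2000)
print(assign_variant("user-42", "signup-copy"), round(z, 2), round(p_value, 4))
```

Hashing the experiment name together with the user ID keeps a user’s assignment stable across sessions while letting different experiments split traffic independently.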

What it validates:

- Which version is better at moving the metric you want to move

Resources:

- “The Ultimate Guide To A/B Testing” by Paras Chopra, founder of VWO

- “The Complete Guide to A/B Testing: Expert Tips from Google, HubSpot and More” by Shanelle Mullin on the Shopify blog

- “Multivariate Testing vs A/B Testing” in the Optimizely glossary

Method 8 - Price Tests

Price testing is becoming more personalized and dynamic as data intelligence gets more sophisticated and scary. The simplest price tests are basically AB tests of pricing. More complex tests target specific user behavior to optimize prices contextually.

Good for:

- Optimizing pricing

How to do it:

- Complete a competitive analysis of your pricing

- Define your pricing test range

- Research and define how you’ll test the pricing. (For example, on iOS, you can’t always put two pricing variations in the App Store. You may have to run introductory pricing, free trials, or use a web landing page to test pricing.)

- Run an A/B/n test with your pricing changes (You can either actually charge different amounts or you could run a price test as a feature fake or as a pre-order and not actually take money.)

- Collect and analyze results
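
When analyzing a price test, conversion rate alone can be misleading because a lower price usually converts better. The Python sketch below compares revenue per visitor across variations instead. The prices, visitor counts, and the `revenue_per_visitor` helper are hypothetical.

```python
# Hypothetical A/B/n results: price -> (visitors, purchases)
price_results = {
    5.00: (1000, 80),
    8.00: (1000, 60),
    12.00: (1000, 30),
}


def revenue_per_visitor(price_results):
    """Revenue earned per visitor shown each price."""
    return {
        price: price * purchases / visitors
        for price, (visitors, purchases) in price_results.items()
    }


rpv = revenue_per_visitor(price_results)
best_price = max(rpv, key=rpv.get)
```

In this made-up data, the lowest price converts best but the middle price earns the most per visitor.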

Note - you can also run a price test more qualitatively by showing customers prices for items to get their reactions. This is less reliable than having people pay for things or indicate that they are trying to pay for something but it’s better than not learning anything at all.

What it validates:

- How many people will pay for a product/service

- Who will pay for that product/service

Resources:

- “The Good-Better-Best Approach to Pricing” by Rafi Mohammed

- “Target ramps up in-store personalization on mobile with acquisition” by Chantal Tode

- “Stop guessing! Use A/B testing to determine the ideal price for your product” by Paras Chopra, founder of VWO

- “How To A/B Test Price When You Have A Sales Team” by Dave Rigotti (This is a very old post by internet standards but there are a lot of great considerations in it)

Method 9 - Blind Product Tests

The classic Pepsi vs Coke test - how does your product stack up against a competitor’s? Or, how do your competitors rate against each other? You can hide all branding and leverage your competitors to better understand how to differentiate.

Good for:

- Finding out how different your product or service really is

- Learning customer attitudes about competitor products

- Learning how usable competitor products are

How to do it:

- Identify competitors to include

- Determine what you’re testing for (is it attitude or task-based?) and create a discussion guide along with any scales you’ll use to score interviews

- Create a prototype or visual reference

- Remove competitor branding so it’s anonymous

- Recruit target customers

- Run them through the prototypes or visuals

- Score each interview and analyze sentiments

- Identify opportunities for improvement in your product/service

What it validates:

- Attitudes about competitor products/services when a brand is not a factor

- Attitudes about your product as compared to competitors

- How usable your competitors’ products are

Resources:

- “Blind And Branded Product Testing Can Tell Completely Different Stories.” on the ACCE blog

- “Measuring Simple Preferences: An Approach to Blind, Forced-Choice Product Testing” academic paper by Bruce S. Buchanan and Donald G. Morrison

Method 10 - Rollouts

If all other validation methods fail you, there are always rollouts. Sometimes, it’s not worth building the fidelity you need to have a high confidence test and it makes more sense to ship something and monitor its performance. That’s where rollouts come in.

Good for:

- Any software release that carries risk (so probably most of them)

How to do it:

- Set up a tool to manage production rollouts. For the web, you can use an AB testing tool or you can use a feature flagging tool like LaunchDarkly or Rollout.io. Apple and Google both have this built into their platforms.

- Define what metrics/information you’ll watch to determine if your release is successful and whether to roll it out to a larger percentage.

- Add the flag to your code

- Deploy your code and set your rollout criteria

- Roll it out

- Review your metrics and make a call whether to roll out to a wider audience

- Repeat until it’s at 100% or you decide to halt the release because of an issue
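
Feature flagging tools take care of this for you, but the core idea of a percentage rollout is small enough to sketch in Python. The code below is a hand-rolled illustration, not the API of LaunchDarkly, Rollout.io, or any other tool; the function names, the flag name, and the rollout schedule in `STEPS` are assumptions.

```python
import hashlib

STEPS = (1, 5, 25, 50, 100)  # hypothetical rollout schedule, in percent of users


def rollout_bucket(user_id: str, flag: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, flag: str, rollout_percent: int) -> bool:
    """A user sees the release if their bucket falls below the current percentage."""
    return rollout_bucket(user_id, flag) < rollout_percent


def next_percent(current: int, metrics_healthy: bool) -> int:
    """Advance to the next step if metrics look healthy; otherwise halt by returning 0."""
    if not metrics_healthy:
        return 0
    later = [step for step in STEPS if step > current]
    return later[0] if later else current


percent = next_percent(5, metrics_healthy=True)
print(percent, is_enabled("user-42", "new-checkout", percent))
```

Because each user’s bucket is stable, anyone who saw the release at 5% still sees it at 25%, so widening the rollout never flips the experience back for early users.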

What it validates:

- That your release isn’t damaging the current experience or metrics

- Possibly that it’s having its intended impact (this will depend on how much you can isolate the release from outside variables like marketing efforts or other teams’ work)

Resources:

- “Feature Toggles (aka Feature Flags)” by Pete Hodgson

- “The Ultimate Feature Flag Getting Started Guide” from the Rollout.io blog

- Use Cases on LaunchDarkly’s website

Closing Thoughts

These are just a fraction of the ways you could test your ideas or execution. The important thing is to continually challenge your team to figure out how to know you’re right about something faster and with the least amount of resources wasted.

No matter which testing method you choose, you may find it helpful to flesh out the vision for a design before deciding what makes sense to test. This doesn’t mean you spend months perfecting an interactive, high-fidelity prototype. What matters most is that you define the desired end state so you have an idea of where you’re heading. This could be as low fidelity as a journey map or a sketch, or more detailed like a workflow or a prototype. You just want to think through enough of this hypothetical future so your team has a shared understanding of the pathway to reach the ultimate goal. From that thinking, you can then identify the biggest risk areas or elements you need to test. Prioritize those areas by impact and effort and select your testing methods.

One last word of advice for those who are concerned that testing a fraction of your vision may not be legitimate. If your team really believes something will have an impact or you have existing evidence that it will, referring back to the problem setting of the creative brief, run more than one validation test. Don’t just do one test and call it a failure. Run multiple types of tests. Work to reduce bias and errors. It may honestly be worth building it all, but nearly all significant design plans can be broken down into smaller layers to test. Consider the tradeoffs of investing in something that you may be wrong about versus everything else you could be allocating resources to.

Teams that learn faster are successful faster. They spend less time building stuff no one wants and they can identify more clearly what’s working and why. The slowest way to learn is to build the entire vision for something and release it all at once.

Choose your methods wisely. Be the team that learns quickly.